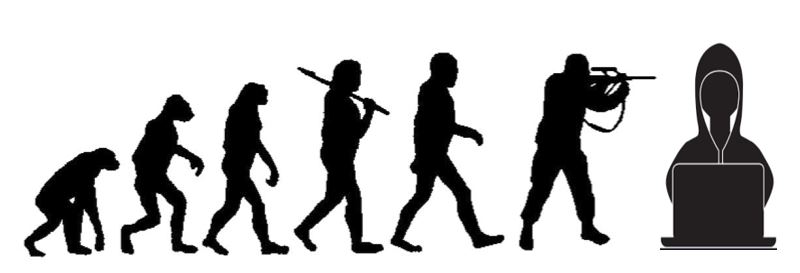

Technological evolution towards the future of Social Engineering

- Category: Social Engineering

- Published: Wednesday, 12 September 2018 10:04

Written by Davide Andreoletti, SUPSI and Nathan Weiss, IAI ELTA Systems

The DOGANA project has allowed the participating researchers to gain a deep understanding of the current Social Engineering landscape. As the project approaches its end, we believe it is time to share our expectations about the future of this vast field. Social Engineering is an interdisciplinary topic grounded in both computer science and the social sciences, so its evolution is shaped by many different factors. Societal changes, advances in psychology and, clearly, the evolution of technology are key elements that are transforming the way people perform social engineering attacks and defend against them.

In this article, we focus on the last of these factors. Given their widespread adoption and their overall impact on many aspects of our lives, we selected the following technologies: Internet of Things (IoT), Online Social Networks (OSNs) and Artificial Intelligence (AI). For the sake of simplicity, we only touch on some of the most relevant aspects of each technology, with the aim of answering two main questions. What advantages do these technologies give an attacker? And, conversely, what benefits do they bring to the defender?

Internet of Things: many tools used on a daily basis are being replaced by their IoT counterparts[1]. This paradigm shift is expected to have a strategic influence on both domestic and industrial environments. The main characteristics of IoT devices are the following:

- a growing number of traditionally standalone devices (e.g., ovens and wristwatches) are now connected to the Internet

- sensitive information (about a user, or about an operational process in industry) is outsourced to the cloud for further analysis and to enable tailored services

- IoT devices are generally resource-constrained, and this affects their security (e.g., it often rules out strong cryptographic solutions)

Online Social Networks: OSNs are online platforms where users create their own profiles and form communities of friends. The main characteristics of OSNs include:

- highly detailed user profiles expose sensitive information such as interests, political views and friendships

- OSNs are expected to be the primary medium of interaction and the main source of information for an increasing number of people[2]

- OSNs are based on the follower/friendship paradigm, which facilitates interaction between potentially fake identities and legitimate users. For example, users form communities around shared interests, and this makes it easier for an attacker to gain the trust of a potential victim.

Artificial Intelligence: AI is a revolutionary set of techniques and methods that aim to enable computers to perform tasks without being explicitly programmed for them. The ability to learn and adapt to the surrounding environment makes AI algorithms a game-changing technology in almost every aspect of our lives. AI attempts to emulate the abstraction process performed by the human brain, which allows it to efficiently perform the following operations:

- data analysis that infers useful information from unstructured data (e.g., images, video, sound and text)

- data generation: AI algorithms can infer a model from data and generate new data according to it; for example, an AI algorithm can compose music and text in a learned style

Let’s now see how these technologies can benefit a social engineer. Social Engineering is commonly defined as a process that manipulates a victim into doing something she would not otherwise do, such as performing a specific action or revealing important information[3]. To this end, an attacker generally i) gathers information about a potential victim (see our post about the case of Cambridge Analytica) and ii) approaches the victim to perform the attack (see our post about persuasion techniques and phishing).

Information gathering

The widespread use of IoT devices has made an unprecedented volume of personal data available. Because these devices generally have limited security, an attacker can exploit them much more easily than other appliances (e.g., desktop computers). In addition, users often publish on OSNs sensitive information captured by their IoT devices (e.g., about fitness activities), extending their digital shadow beyond pictures, interests and written posts. In this context, the ability of today’s AI algorithms to infer useful information from large amounts of (mostly) unstructured data is the icing on the cake for a social engineer. In fact, AI software is rapidly advancing towards the ability to correlate large amounts of information and to perform psychologically oriented tasks as well. Just think of the humanoid robot Sophia[4], which is able to infer emotions by reading facial expressions and to learn how to react accordingly. Such emotion-recognition capabilities can draw on the huge amount of visual information about a person available on OSNs. They could be used to automate the information-gathering phase across a vast number of users, each profiled down to a very detailed emotional level.

Attack

Once the victim has been profiled, the social engineer chooses the most suitable vector to attack her. The inherent insecurity of many IoT devices can help a social engineer in this phase too. For example, a phishing message displayed on a domestic IoT device could induce a victim to perform dangerous operations. Note that IoT devices operate in both cyber and physical space, which further increases the disruptive effects of an attack (e.g., an industrial process can be compromised). OSNs make it easy to hide an identity behind a fake profile and to come into contact with a person (e.g., through the chat services offered by the OSN platform). Finally, AI technologies with generative capabilities can engage in realistic conversations with the victim (see again the abilities of the robot Sophia[4]). This generative ability extends to text and voice messages, which opens the door to automated, scalable phishing[5] and vishing (i.e., voice phishing) campaigns. For example, the Canadian startup Lyrebird[6] has created an algorithm able to learn and reproduce any person’s voice, with the possibility of infusing the speech with emotions chosen by the attacker. These technologies could also be used, with very limited human intervention, to create realistic fake news that spreads easily on OSNs.

On the defense side, there are two main approaches: i) awareness methods that teach users how to behave to avoid becoming victims, and ii) attack detection.

Awareness methods

IoT devices generally perform few operations, so their attack surface is limited. This can be exploited to create awareness campaigns tailored to the role of each device, so that users can easily understand the risks associated with it. OSNs are a powerful medium for spreading awareness campaigns in various forms (e.g., quizzes or games) and reaching a very large audience. Moreover, with the help of AI methods for user profiling, we expect future OSNs to become a medium for awareness methods tailored to an individual user or to an entire community.

Attack Detection

IoT devices are equipped with sensors able to collect information about their environment. Since a social engineer may rely on physical presence to perform her attacks, we expect the environments of the future to become increasingly smart at detecting suspicious behavior (e.g., dumpster diving[7]). OSNs are a huge source of information that can be analyzed and correlated to detect an attack. Detection can target both the attack itself (e.g., user profiles are scanned for phishing attempts) and the period after the attack (e.g., sudden changes in a user’s OSN usage patterns can reveal that an attack has taken place). Finally, the analytical capabilities of AI are expected to play a significant role in detecting signals that humans may not notice (e.g., recognizing whether a voice message is genuine or synthetically generated).
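The post-attack idea of spotting sudden changes in OSN usage patterns can be illustrated with a very simple statistical baseline. The sketch below is only an assumption of how such a check might look, not a method used in DOGANA. It compares each day's activity count for an account (e.g., messages sent per day, a hypothetical metric) with a trailing window and flags days whose z-score exceeds a threshold. The function name `flag_usage_anomalies`, the 7-day window and the threshold of 3 are illustrative choices.

```python
from statistics import mean, stdev


def flag_usage_anomalies(daily_counts, window=7, threshold=3.0):
    """Return the indices of days whose activity deviates sharply from the
    trailing `window` days (hypothetical helper, illustrative only)."""
    if window < 2:
        raise ValueError("window must be at least 2 to estimate a deviation")
    flagged = []
    for i in range(window, len(daily_counts)):
        baseline = daily_counts[i - window:i]  # the previous `window` days
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: any change at all counts as a deviation.
            if daily_counts[i] != mu:
                flagged.append(i)
            continue
        z = (daily_counts[i] - mu) / sigma  # standardized deviation from the baseline
        if abs(z) > threshold:
            flagged.append(i)
    return flagged


# Messages sent per day by one account; day 9 shows a sudden burst of activity.
activity = [12, 15, 11, 14, 13, 12, 16, 14, 13, 95, 15]
print(flag_usage_anomalies(activity))  # [9]
```

A real detector would likely combine many such signals (and possibly learned models) rather than rely on a single count, but the principle of comparing current behavior against a user's own recent baseline is the same.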

In conclusion, we believe that these three technologies are lowering the barrier to entry into the black market of social engineering. The proliferation of information made available by IoT[8] and OSNs, together with the evolution of analytical and generative AI techniques, is creating the perfect environment even for unskilled attackers. We envision the development of a cyber black market where many social engineering operations are sold as a service (e.g., emotion recognition as a service; see also our post about phishing as a service). On the other hand, if correctly integrated (e.g., AI for fake news recognition on OSNs), these same technologies can form a solid line of defense.

[1] https://www.finoit.com/blog/top-15-sensor-types-used-iot/

[2] https://www.smartinsights.com/social-media-marketing/social-media-strategy/new-global-social-media-research/

[3] https://searchsecurity.techtarget.com/definition/social-engineering

[4] https://en.wikipedia.org/wiki/Sophia_(robot)

[5] https://www.blackhat.com/docs/us-16/materials/us-16-Seymour-Tully-Weaponizing-Data-Science-For-Social-Engineering-Automated-E2E-Spear-Phishing-On-Twitter-wp.pdf

[7] https://searchsecurity.techtarget.com/definition/dumpster-diving

[8] https://www.statista.com/statistics/471264/iot-number-of-connected-devices-worldwide/